-

Notifications

You must be signed in to change notification settings - Fork 39

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Question]: What are the definitions of the different stages? #98

Comments

|

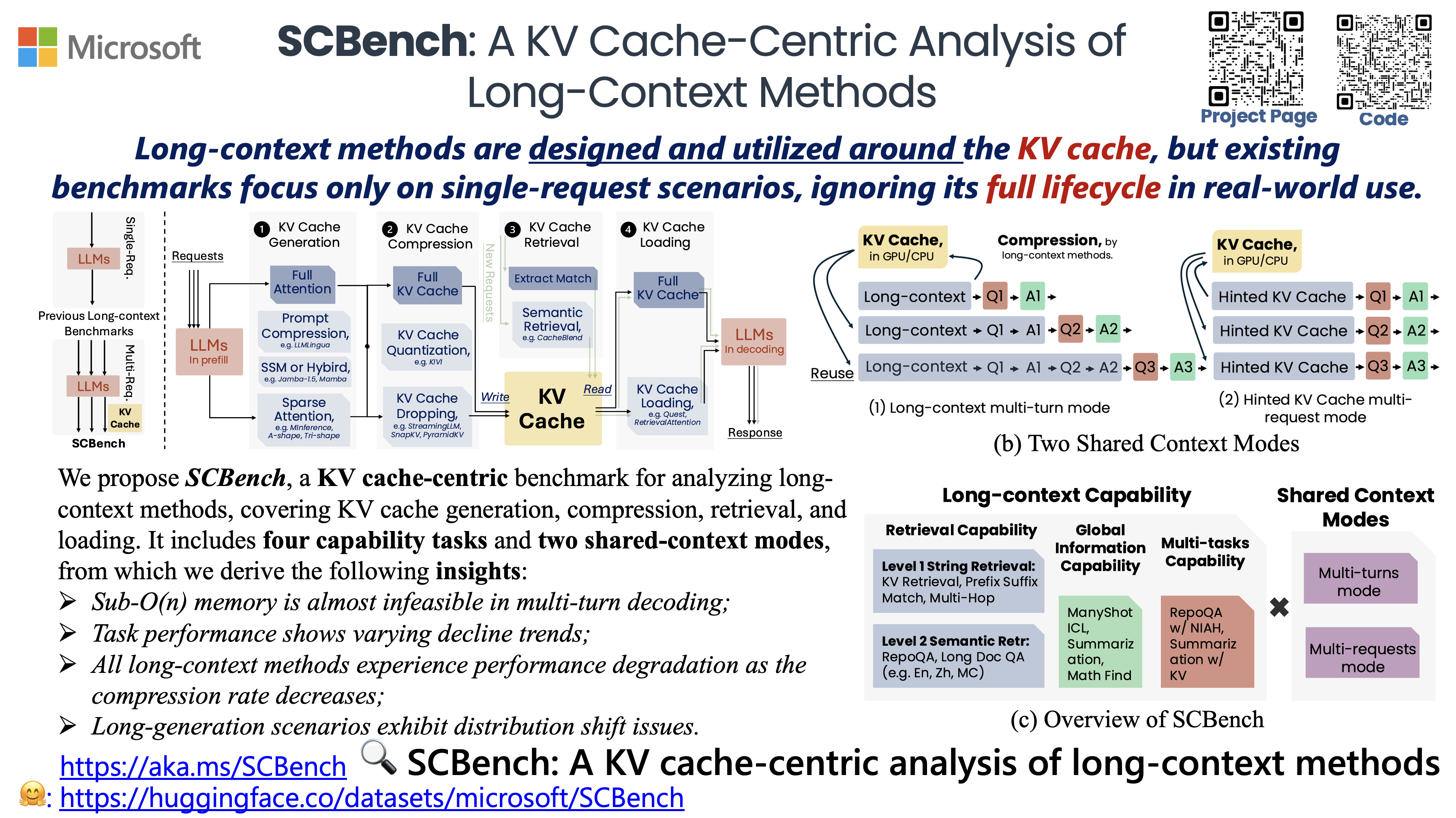

Hi @crazyofapple, thanks for your interest in our work. These four stages are part of the KV cache lifecycle that we recently introduced in SCBench. You can find the details in Section 2 and Figure 1. |

|

Thank you. I read the iclr submission before. Thank you for your excellent code base and timely response. |

|

@iofu728 choices=[ |

|

Hi @crazyofapple, thanks for your question, and apologies for any confusion caused by unclear code.

Let me know if you have further questions! |

|

Thank you very much for your answer, which solved my question. BTW, I would like to ask about your VLLM version (mine is vllm==0.6.5, torch==2.5.1, python 3.12.4), because my current vllm_minference will report some errors. |

|

Also, I can't find a python package for |

|

Hi @crazyofapple, I’ve been testing locally with vllm==0.6.0. I’ll check the higher versions soon. Could you share your error log? I’ll double-check if the specific error matches when I look into it later. As for |

|

Many thanks for the reply. I changed to your version and it worked. The following is the error message for the higher version. `[rank0]: Traceback (most recent call last): [rank0]: The above exception was the direct cause of the following exception: [rank0]: Traceback (most recent call last): |

|

@iofu728 BTW, I see that the results in your paper are all from a single method. Have you tried to combine different types of methods? If so, what did you find? If not, do you think this combination will have a future? I saw the improvement of snap+minference in the original minference paper, but it seems that there is no large-scale empirical result of the combination. |

|

Hi @crazyofapple, That’s a great question! We haven’t conducted detailed research in this area, but I personally believe there’s potential for exploration and some interesting insights might be uncovered. Currently, aside from the MInference w/ SnapKV results in MInference, there’s also MInference w/ ShadowKV provided by ShadowKV for reference. |

Describe the issue

I would like to ask politely, what are the definitions of the different stages here? How to distinguish them? I am a novice in this field

ATTN_KV_TYPES=(

"vllm;dense" # FullAttention

"vllm_minference;dense" "vllm_a_shape;dense" "vllm_tri_shape;dense" # 1) KV Cache Generation Stage

"dense;streamingllm" "dense;snapkv" "dense;pyramidkv" "dense;kivi" # 2) KV Cache Compression Stage

"vllm_blend;dense" # 3) KV Cache Retrieval Stage

"dense;quest" "dense;retr_attn" # 4) KV Cache Loading Stage

)

The text was updated successfully, but these errors were encountered: